Luca Del Pero, Susanna Ricco, Rahul Sukthankar, Vittorio Ferrari

University of Edinburgh (CALVIN), Google Research

aaa

1. Overview

We propose an automatic system for organizing the content of a collection of unstructured videos of an articulated object class (e.g. tiger, horse). By exploiting the recurring motion patterns of the class across videos, our system: 1) discovers its characteristic behaviors; and 2) recovers pixel-to-pixel alignments across different instances. Our system can be useful for organizing video collections for indexing and retrieval. Moreover, it can be a platform for learning the appearance or behaviors of object classes from Internet video. Traditional supervised techniques cannot exploit this wealth of data directly, as they require a large amount of time-consuming manual annotations. This work is discussed in detail in our CVPR 2015 [1] and IJCV 2016 [2] papers. The dataset used in these papers is available here for download.

aaa

2. Behavior Discovery

The behavior discovery stage generates temporal video intervals, each automatically trimmed to one instance of the discovered behavior, clustered by type. It relies on our novel motion representation for articulated motion based on the displacement of ordered pairs of trajectories (PoTs). This is the subject of our CVPR 2015 paper [1] (more experiments are presented in [2]).

In the videos below, we illustrate a few discovered clusters for three different classes: tiger, dog and horse.

Pair of trajectories (PoTs)

We represent articulated object motion using a collection of automatically selected ordered pairs of trajectories (PoTs), tracked over n frames. This is illustrated in this video. See the text below for more details.

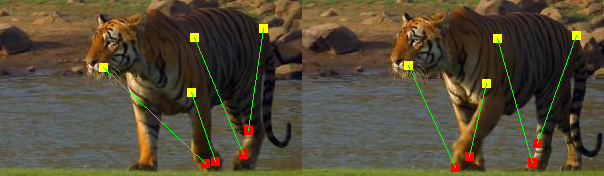

Only two trajectories following parts of the object moving relatively to each other are selected as a PoT, as these are the pairs that move in a consistent and distinctive manner across different instances of a specific motion pattern. For example, the motion of a pair connecting a tiger’s knee to its paw consistently recurs across videos of walking tigers

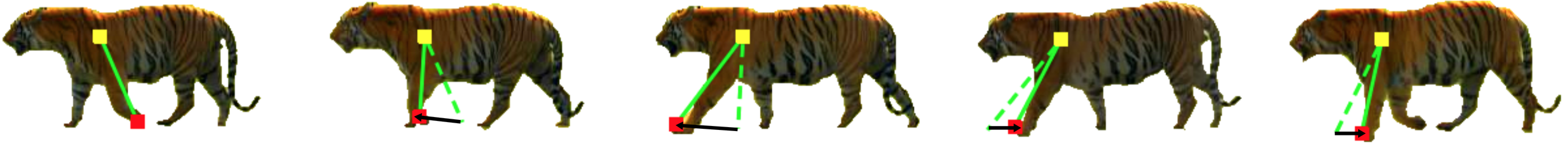

Our descriptor for a PoT concatenates the displacement vectors (in black) of the second trajectory in the pair (swing, in red) with respect to the first (anchor, in yellow). This is in contrast to state-of-the-art motion descriptors that use the displacement of individual trajectories, like Improved DTF trajectories [5].

Our descriptor for a PoT concatenates the displacement vectors (in black) of the second trajectory in the pair (swing, in red) with respect to the first (anchor, in yellow). This is in contrast to state-of-the-art motion descriptors that use the displacement of individual trajectories, like Improved DTF trajectories [5].

PoT selection

First, we use our method for video foreground segmentation [3], and then extract dense point trajectories [5] only on the foreground. We select as PoTs those pairs where one trajectory roughly follows the median velocity of the object, while the other moves differently. We approximate the median velocity with the median optical flow displacement of foreground pixels. This simple method allows to robustly select pairs where the two trajectories follow parts moving relatively to each other (e.g. paw-to-hip or shoulder-to-paw when walking, heat-to-neck when turning the head, like in the figure below). A descriptor for each video is computed by aggregating all extracted PoTs into a Bag-of-Words histogram.

|

|

|

Temporal segmentation and behavior discovery

An input video typically contains multiple behaviors (e.g. the tiger runs for a bit, then it stops, it sits down and starts rolling on the ground). We are interested in finding video intervals automatically trimmed to the duration of exactly one behavior. For this, we perform automatic temporal segmentation using motion cues. We first detect and remove video intervals with no interesting motion (pauses). Second, we detect and segment out video intervals showing periodic motion (e.g. walking, running). This is also done using PoTs descriptors. Last, we cluster all segmented video intervals, to discover recurring behaviors. The video below shows a few examples of detected periodic motion.

Evaluation

We evaluate the purity of the discovered clusters on a dataset of videos with ground-truth behavior annotations (e.g. walk, turn head), available here. We annotated the ground-truth behavior in each frame from 600 videos for three different object classes: dog, horse and tiger.

PoTs for local matching

While we cluster video intervals using a Bag-of-Words of PoTs, an individual PoT is a local feature that enables finding spatiotemporal correspondences. Below, we show a few pairs of video intervals that out method put in the same cluster. In each, we shaw the 10 pairs of PoTs that are closest in descriptor space. One can see how these PoTs correspond to the motion of the swinging leg in the walking cluster, and of the rotation of the head in the turning head cluster. The blue lines connect anchors, the green connect swings.

aaa

3. Spatial alignment

The alignment stage aligns hundreds of instances of the class performing similar behavior to a great accuracy, despite considerable appearance variations (e.g. an adult tiger and a cub). It uses a flexible Thin Plate Spline deformation model that can vary through time. We first put short intervals in the discovered behavior clusters in spatiotemporal correspondence. Using correspondences between trajectories, we discover a temporal Thin-Plate Splines that maps the pixels of a tiger in one video to those of a tiger in a different video with great accuracy. This is discussed in detail in our IJCV 2016 [2] paper. The alignment process is illustrated in the video below.

Evaluation

For evaluation, we manually annotated the 2D location of 19 landmarks in each frame of several tiger and horse videos (e.g. left eye, neck, front left ankle, etc.). Unlike coarser annotations, such as bounding boxes, the landmarks enable evaluating the alignment of objects with non-rigid parts with greater accuracy. Also this data is available here.

aaa

4. Acknowledgements

This work was partly funded by a Google Faculty Award, and by ERC Grant “Visual Culture for Image Understanding”. We thank Anestis Papazoglou for helping with the collection of the TigDog dataset.

aaa

5. References

- Articulated Motion Discovery using Pairs of Trajectories

, Rahul Sukthankar, Vittorio Ferrari,

In Computer Vision and Pattern Recognition (CVPR), 2015.

![pdf [pdf]](http://calvin-vision.net/bigstuff/proj-imagenet/icons/pdf.png)

- Behavior Discovery and Alignment of Articulated Object Classes from Unconstrained Video

, Rahul Sukthankar, Vittorio Ferrari,

International Journal of Computer Vision (IJCV), 2016.

![pdf [pdf]](http://calvin-vision.net/bigstuff/proj-imagenet/icons/pdf.png)

- Fast object segmentation in unconstrained video

,

In International Conference on Computer Vision (ICCV), 2013.

![pdf [pdf]](http://calvin-vision.net/bigstuff/proj-imagenet/icons/pdf.png)

![url [url]](http://calvin-vision.net/bigstuff/proj-imagenet/icons/url.png)

- Learning Object Class Detectors from Weakly Annotated Video

,

In Computer Vision and Pattern Recognition (CVPR), 2012.

![pdf [pdf]](http://calvin-vision.net/bigstuff/proj-imagenet/icons/pdf.png)

![url [url]](http://calvin-vision.net/bigstuff/proj-imagenet/icons/url.png)

- Action recognition with improved trajectories

,

In International Conference on Computer Vision (ICCV), 2013.